Using TheBloke's Mistral 7B model from Hugging Face in your local environment with Ollama

Here are step-by-step instructions for running a quantized version of the LLM locally with Ollama.

Introduction

Large Language Models (LLMs) are fascinating, but their hardware requirements can be demanding. Quantization, which stores model weights at lower numeric precision, shrinks memory requirements enough to run LLMs locally. In this blog, we'll look at quantized models in the GGUF format specifically and set up the Mistral 7B LLM using Ollama.
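To make "lower numeric precision" concrete, here is a minimal Python sketch of the idea behind GGUF's Q8_0 type, assuming llama.cpp's scheme: weights are split into blocks of 32, and each block stores one scale (the block's largest absolute value divided by 127) plus 32 signed 8-bit integers. This is an illustration, not llama.cpp's code; the real format stores the scale as a 16-bit float, and Python's `round` uses banker's rounding where llama.cpp rounds halves away from zero.

```python
import random
from typing import List, Tuple

BLOCK_SIZE = 32  # Q8_0 quantizes weights in blocks of 32 values


def quantize_block_q8_0(block: List[float]) -> Tuple[float, List[int]]:
    """Quantize one block to a single scale plus signed 8-bit integers."""
    amax = max(abs(x) for x in block)
    scale = amax / 127.0  # the largest magnitude in the block maps to +/-127
    if scale == 0.0:
        return 0.0, [0] * len(block)  # all-zero block
    # Clamp defensively so every value fits in the signed 8-bit range.
    return scale, [max(-127, min(127, round(x / scale))) for x in block]


def dequantize_block_q8_0(scale: float, qs: List[int]) -> List[float]:
    """Recover approximate weights: each weight is roughly scale * q."""
    return [scale * q for q in qs]


def quantize_q8_0(weights: List[float]) -> List[Tuple[float, List[int]]]:
    """Split a weight vector into blocks and quantize each block independently."""
    return [
        quantize_block_q8_0(weights[i:i + BLOCK_SIZE])
        for i in range(0, len(weights), BLOCK_SIZE)
    ]


if __name__ == "__main__":
    random.seed(0)
    weights = [random.uniform(-1.0, 1.0) for _ in range(64)]
    blocks = quantize_q8_0(weights)
    restored = [w for scale, qs in blocks for w in dequantize_block_q8_0(scale, qs)]
    worst = max(abs(a - b) for a, b in zip(weights, restored))
    print(f"{len(blocks)} blocks, max reconstruction error {worst:.5f}")
```

Each block costs 32 bytes of 8-bit values plus a 2-byte scale, so 34 bytes per 32 weights, or 8.5 bits per weight instead of 16 for half precision. That is why a Q8_0 file is roughly half the size of the FP16 weights, and lower-bit types shrink it further.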

Why Choose Mistral 7B

Here's why Mistral 7B is a compelling choice for local use:

- Resource-efficient: GGUF-quantized versions run on machines with modest GPU resources, and even on CPU alone.

- Performance: Offers strong results despite quantization.

- Accessibility: Easily found on Hugging Face and works seamlessly with Ollama.

Getting Started

1. Downloading Mistral 7B

Thanks to TheBloke, we have been spoilt for choice: he publishes GGUF versions of popular models at several quantization levels, usually within a few days of a model appearing on Hugging Face. You can get your chosen Mistral 7B variant from Hugging Face; pick a quantization based on how much RAM your machine has. Apple Silicon (M-series) Macs tend to run these models well because their unified memory gives the GPU high-bandwidth access to system RAM. Here's how to download with the Hugging Face CLI:

```
huggingface-cli download TheBloke/Mistral-7B-Instruct-v0.1-GGUF mistral-7b-instruct-v0.1.Q8_0.gguf \
  --local-dir models/ --local-dir-use-symlinks False
```

Alternatively, you can go to the TheBloke/Mistral-7B-Instruct-v0.1-GGUF page on Hugging Face and download the file yourself from the browser.
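To turn "pick a quantization based on how much RAM you have" into numbers, here is a rough Python sketch. It assumes about 7.24 billion parameters for Mistral 7B, the bits-per-weight figures of llama.cpp's simple block formats (Q4_0: 4.5, Q5_0: 5.5, Q8_0: 8.5), and a hypothetical 2 GB of headroom for the OS and context cache. The file-name pattern is extrapolated from the Q8_0 file above, so check the repository's file list before downloading; the real sizes listed there are the better guide.

```python
from typing import Optional

# Approximate bits per weight: 32-weight blocks with a 16-bit scale per block.
BITS_PER_WEIGHT = {"Q8_0": 8.5, "Q5_0": 5.5, "Q4_0": 4.5}

MISTRAL_7B_PARAMS = 7.24e9  # assumption: approximate parameter count


def estimated_size_gb(n_params: float, bits_per_weight: float) -> float:
    """Approximate GGUF file size in gigabytes (1 GB = 1e9 bytes)."""
    return n_params * bits_per_weight / 8 / 1e9


def pick_quant(ram_gb: float, headroom_gb: float = 2.0) -> Optional[str]:
    """Return the most precise quantization whose file fits in RAM minus headroom.

    headroom_gb is a hypothetical allowance for the OS, context cache, etc.
    Returns None if even the smallest option does not fit.
    """
    budget = ram_gb - headroom_gb
    # Try the largest (most accurate) quantization first.
    for quant, bpw in sorted(BITS_PER_WEIGHT.items(), key=lambda kv: -kv[1]):
        if estimated_size_gb(MISTRAL_7B_PARAMS, bpw) <= budget:
            return quant
    return None


def gguf_filename(quant: str) -> str:
    """Hypothetical helper: follows the naming pattern of the Q8_0 file."""
    return f"mistral-7b-instruct-v0.1.{quant}.gguf"


if __name__ == "__main__":
    for ram in (8, 16):
        quant = pick_quant(ram)
        print(ram, "GB RAM ->", gguf_filename(quant) if quant else "too little RAM")
```

Under these assumptions a Q8_0 file comes to roughly 7.7 GB, so it suits a 16 GB machine, while an 8 GB machine is better served by a 5-bit or 4-bit file.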

2. Installing Ollama

If you don't have Ollama yet, go to the Ollama website and download the installer for macOS or Windows. Windows support was just launched and is currently in preview.

On Linux, you can install it with curl:

```
curl https://ollama.ai/install.sh | sh
```
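Once installed, the Ollama server listens on `http://localhost:11434` by default. A small Python sketch, using only the standard library, can confirm that the `ollama` binary is on your PATH and that the server answers before you go further. The function names here are hypothetical helpers, not part of Ollama.

```python
import shutil
import urllib.error
import urllib.request
from typing import Optional

DEFAULT_OLLAMA_URL = "http://localhost:11434"  # Ollama's default local address


def ollama_cli_path() -> Optional[str]:
    """Return the full path of the `ollama` executable, or None if not found."""
    return shutil.which("ollama")


def ollama_is_running(base_url: str = DEFAULT_OLLAMA_URL, timeout: float = 2.0) -> bool:
    """Return True if an HTTP server answers at base_url with status 200."""
    try:
        with urllib.request.urlopen(base_url + "/", timeout=timeout) as resp:
            return resp.status == 200
    except (urllib.error.URLError, OSError):
        # Connection refused, timeout, or DNS failure: treat as "not running".
        return False


if __name__ == "__main__":
    print("ollama binary:", ollama_cli_path() or "not found")
    print("server reachable:", ollama_is_running())
```

If the binary is found but the server is not reachable, starting the desktop app (or running `ollama serve`) should bring it up.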

3. Creating the Modelfile

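A Modelfile tells Ollama which GGUF model file to load and how to run it: sampling parameters, stop sequences, the prompt template, and the system message. Here's a sample Modelfile for Mistral 7B. Adjust it as needed; frankly, I was able to get this working with just the FROM line. Note that this TEMPLATE uses the `<|system|>`/`<|user|>`/`<|assistant|>` chat layout, while Mistral 7B Instruct was fine-tuned on the `[INST] ... [/INST]` format, so switching the template to that format may give better answers.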

```
FROM models/mistral-7b-instruct-v0.1.Q8_0.gguf

PARAMETER num_ctx 3900
PARAMETER temperature 0.7
PARAMETER top_k 50
PARAMETER top_p 0.95
PARAMETER stop "<|system|>"
PARAMETER stop "<|user|>"
PARAMETER stop "<|assistant|>"
PARAMETER stop "</s>"

TEMPLATE """<|system|>
{{ .System }}</s>
<|user|>
{{ .Prompt }}</s>
<|assistant|>
"""

SYSTEM """You are an AI assistant for users to find information. If you don't know something, don't make stuff up. Be kind and empathetic"""
```
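Ollama fills in TEMPLATE using Go template syntax: `{{ .System }}` receives the SYSTEM text and `{{ .Prompt }}` receives your message, and generation halts at any `PARAMETER stop` sequence. The Python sketch below approximates both steps so you can see the string the model actually receives, assuming the `<|system|>`/`<|user|>`/`<|assistant|>` layout with each tag on its own line. It is an illustration only: Ollama does this internally, and it applies stop sequences while generating rather than by trimming finished text.

```python
from typing import Sequence

SYSTEM = (
    "You are an AI assistant for users to find information. "
    "If you don't know something, don't make stuff up. Be kind and empathetic"
)

# Assumed template layout: one tag per line, each turn closed with </s>.
TEMPLATE = "<|system|>\n{{ .System }}</s>\n<|user|>\n{{ .Prompt }}</s>\n<|assistant|>\n"

STOPS = ["<|system|>", "<|user|>", "<|assistant|>", "</s>"]


def render_prompt(prompt: str, system: str = SYSTEM, template: str = TEMPLATE) -> str:
    """Substitute the two template variables, approximating Ollama's rendering."""
    return template.replace("{{ .System }}", system).replace("{{ .Prompt }}", prompt)


def apply_stops(generated: str, stops: Sequence[str] = STOPS) -> str:
    """Cut text at the earliest stop sequence, mimicking PARAMETER stop."""
    cut = len(generated)
    for stop in stops:
        idx = generated.find(stop)
        if idx != -1:
            cut = min(cut, idx)
    return generated[:cut]


if __name__ == "__main__":
    print(render_prompt("Write a two line tweet about space travel."))
```

The stop list matters because, without it, the model may keep going and invent the next `<|user|>` turn itself.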

Reusing a model downloaded for another application

I already had models downloaded for LM Studio and didn't want Ollama to download the same files again. So I went to the folder where LM Studio had saved the Mistral 7B model, created a Modelfile whose FROM line points to that GGUF file, opened a terminal in the folder, and ran the following ollama commands with that Modelfile. That's it. LM Studio and Ollama can then work from the same downloaded GGUF file, which saves you a second multi-gigabyte download. Keep in mind that `ollama create` copies the model data into Ollama's own model store, so the main saving is the download; the weights may still take up disk space twice.
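If you have several models in LM Studio's folder, a short Python sketch can find the GGUF files and write a minimal Modelfile (just the FROM line, as described above) for one of them. The models folder location varies by LM Studio version and platform, so pass it in yourself; the function names are hypothetical helpers.

```python
from pathlib import Path
from typing import List


def find_gguf_files(root: Path) -> List[Path]:
    """Recursively list .gguf files under root, sorted for stable output."""
    return sorted(p for p in root.rglob("*.gguf") if p.is_file())


def write_minimal_modelfile(gguf: Path, out_dir: Path) -> Path:
    """Write a Modelfile whose FROM line points at an existing GGUF file."""
    modelfile = out_dir / "Modelfile"
    # An absolute path lets you run `ollama create` from any directory.
    modelfile.write_text(f"FROM {gguf.resolve()}\n", encoding="utf-8")
    return modelfile
```

For example, `find_gguf_files(Path("~/path/to/lm-studio/models").expanduser())` lists candidate files (the path is a placeholder); pass the one you want to `write_minimal_modelfile` together with the folder where you will run `ollama create`.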

4. Building the Model

Remember the model name you pass to the create command; you will use it whenever you call the model through Ollama. Keep it short and unique.

```
ollama create mistral-local -f Modelfile
```

You can see the list of models in Ollama with:

```
ollama list
```

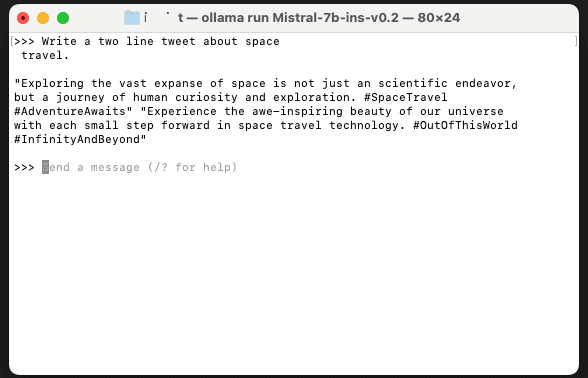
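The same list is available programmatically from the Ollama server's `GET /api/tags` endpoint, which returns JSON with a `models` array whose entries carry a `name`. A minimal sketch, assuming the default address; the helper names are hypothetical, and only `list_local_models` needs a running server.

```python
import json
import urllib.request
from typing import Any, Dict, List

OLLAMA_URL = "http://localhost:11434"  # default local address


def model_names(tags_response: Dict[str, Any]) -> List[str]:
    """Extract model names from the JSON returned by GET /api/tags."""
    return [m["name"] for m in tags_response.get("models", [])]


def list_local_models(base_url: str = OLLAMA_URL) -> List[str]:
    """Programmatic equivalent of `ollama list` (requires a running server)."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=5) as resp:
        return model_names(json.load(resp))
```

Because no tag was given to `ollama create`, the new model typically shows up as `mistral-local:latest`.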

5. Running the Model

```
ollama run mistral-local
```

Example Interaction

```
>>> Write a two line tweet about space travel.
"Exploring the vast expanse of space is not just an scientific endeavor,
but a journey of human curiosity and exploration. #SpaceTravel
#AdventureAwaits" "Experience the awe-inspiring beauty of our universe
with each small step forward in space travel technology. #OutOfThisWorld
#InfinityAndBeyond"

>>>
```
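Besides the interactive prompt, other applications can use the model through Ollama's REST API. Here is a minimal Python sketch against the `POST /api/generate` endpoint with streaming turned off, in which case the reply arrives as one JSON object whose `response` field holds the generated text. The default address and the helper names are assumptions for illustration.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434"  # default local address


def build_generate_request(model: str, prompt: str,
                           base_url: str = OLLAMA_URL) -> urllib.request.Request:
    """Build a non-streaming POST request for Ollama's /api/generate endpoint."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        f"{base_url}/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model: str, prompt: str, base_url: str = OLLAMA_URL) -> str:
    """Send the prompt and return the generated text (requires a running server)."""
    req = build_generate_request(model, prompt, base_url)
    # Generation on a local machine can be slow, so allow a generous timeout.
    with urllib.request.urlopen(req, timeout=300) as resp:
        return json.load(resp)["response"]
```

With the server running, `print(generate("mistral-local", "Write a two line tweet about space travel."))` sends the same request as the interactive session above; the model name must match the one used in `ollama create`.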

Remember: to delete the model, run:

```
ollama rm mistral-local
```